"Models Didn’t Bring Down Wall Street; People Brought Down Wall Street Katrina Lamb | May 12th, 2009

Filed under: Business Intelligence, Market Insight | Tags: Alfred Marshall, CDOs, complexity, credit default swaps, decision-making, economic models, economics, investment banking, models, predictive modeling, probability-based recommendations, rating agencies, securities, the formula that brought down wall st, uncertainty, Wall Street, Wired magazine |

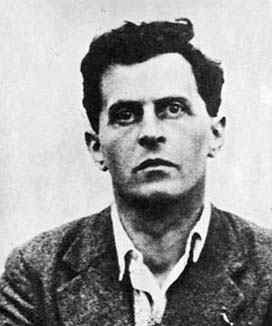

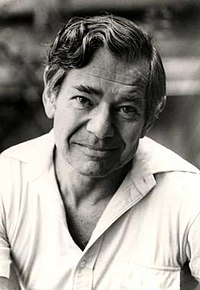

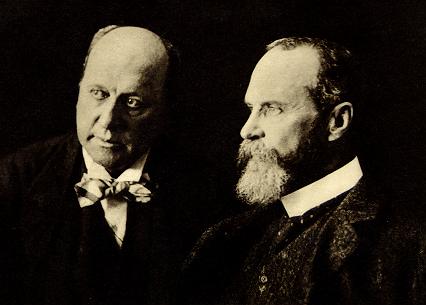

“Burn the mathematics” wrote economist Alfred Marshall in a letter to a friend, musing about the proper role of mathematics and scientific inquiry in the field of economics. That century-old cogitation would seem to be a prêt-à-porter soundbite for these latter days of the 21st century’s first decade – a time in which the mathematical infrastructure that underpins longstanding economic and financial theories stands accused of all manner of malfeasance, particularly given its presumed role in the decade’s signature economic event – the financial market meltdown of 2008. The logic behind the accusation goes roughly thus: More complex (but not necessarily more “accurate”) models allow for more complex instruments to be created. Increased complexity means it takes more time to process and then fully comprehend what the numbers may be telling you. At the same time, though, technology allows buy and sell orders to be executed almost instantaneously through electronic trading systems. Time is of the essence, and ponderously complex computations simply won’t do. A seemingly elegant (and fast, and commercially viable) shortcut is discovered and becomes the currency of the day. The models’ outputs come to be trusted blindly simply because there is no time to question them (and too much money to be made by using them). The impenetrable Greek letters obscure how sensitive the models are to changes in important assumptions – which is fine for a few years because those assumptions (e.g. rising housing prices) don’t change – but then all of a sudden they do. The models start losing more money than they make. Then the chasm widens further as the high levels of leverage in the system make themselves felt. The losses accelerate dramatically, wiping out years of profits in just a few months. Burn the mathematics, indeed.

But let’s take a different look at this apparent tight coupling of mathematics and dire outcomes. An author who has published widely on Wall Street’s use of mathematical models recently offered us an interesting opinion in correspondence. His point was that the problem with the models was not so much their complexity as the fact that they were models in the first place: you can’t ever perfectly hedge model risk. Now, I agree with that observation: a model by definition selects some aspects of reality to represent and omits others, and the choice of what to include and what to omit is subject to human error, and is therefore fallible and not perfectly hedgeable. But I take issue with the idea that the fault lies in the existence of the models themselves. Models can be misused – I think that much is clear. But the notion that models are all doomed to failure obscures a deeper truth about the goals of predictive modeling, namely that you can seek either to reduce the world or to truly explain it. By trying to elegantly reduce the world to as few predictor variables as possible, you are more likely to be sowing the seeds of future failure, because complexity and actual drivers of outcomes are taken out of the equations to make them more solvable (or perhaps sellable, as in the case of the Gaussian copula function that was behind Wall Street’s demise, as we discussed in a previous posting, “You Can’t Punt Away the Dimensionality Curse”). Predictive modelers don’t have to go down that road, however: they can also set out with the goal not of reducing an entire system to a single neat, tractable equation, but of quantifying and explaining all of the relationships that dictate outcomes to the fullest extent possible. Tractability and computability are things to address later in the process, through technological means, but they should not dictate the fundamental mathematical approach at the outset.
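To make the cost of that kind of reduction concrete, here is a minimal sketch of a one-factor Gaussian copula for two loans, in which all of the dependence between them is squeezed into a single correlation number. This is a simplified textbook-style illustration, not the formulation from Li's article or anything a trading desk actually ran; the function name `joint_default_rate`, the 5% default probability, and the correlation values are all assumptions chosen for illustration.

```python
import random
from statistics import NormalDist


def joint_default_rate(p_default: float, rho: float,
                       trials: int = 50_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the chance that two loans both default.

    Each loan's latent variable mixes a shared market factor with its own
    idiosyncratic noise; rho is the correlation between the two latent
    variables. All names and parameters here are hypothetical.
    """
    rng = random.Random(seed)
    # A loan defaults when its latent value falls below this threshold,
    # which is set so that each loan defaults with probability p_default.
    threshold = NormalDist().inv_cdf(p_default)
    w_shared = rho ** 0.5
    w_own = (1.0 - rho) ** 0.5
    both = 0
    for _ in range(trials):
        market = rng.gauss(0.0, 1.0)
        loan_a = w_shared * market + w_own * rng.gauss(0.0, 1.0)
        loan_b = w_shared * market + w_own * rng.gauss(0.0, 1.0)
        if loan_a < threshold and loan_b < threshold:
            both += 1
    return both / trials


# Same loans, same individual default risk; only the assumed correlation changes.
for rho in (0.0, 0.3, 0.6, 0.9):
    rate = joint_default_rate(0.05, rho)
    print(f"rho={rho:.1f}  estimated P(both default) = {rate:.4f}")
```

The individual default probability never changes in this sketch, yet the estimated chance of both loans failing together rises sharply as the single correlation input rises. If that one number is wrong, or stops being stable, everything priced off it is wrong too.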

As I see it, the problem with the financial market meltdown is not that David Li published an article in The Journal of Fixed Income on the Gaussian copula function, or even that in his article Li, then an analyst with JPMorganChase, identified the price of credit default swap (CDS) contracts as a seemingly elegant proxy for the mortgage market – a proxy that greatly reduced the immense complexity of modeling values and risks in this market but, as it turned out, lost a great deal of critically important information along the way. No – the real problem was with the incremental decisions practitioners made to adopt this model wholesale, to leverage it up to 50 or more times the worth of the underlying assets, and ultimately to employ it heedlessly as a path to untold riches. In other words, it was the people who used the model, not the model itself. It was the rating agencies that, in conferring the AAA ratings without which the securities would never have been as widely distributed as they were, assumed that housing prices would never go down. It was the investment bankers who successfully shouted down the warnings of their internal credit risk departments so that they could sell ever-higher volumes of CDOs, with ever-higher levels of leverage, in order to maximize their year-end bonuses.
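The arithmetic of leverage is worth spelling out, because it is what turned a modeling error into a catastrophe. The sketch below assumes that "leverage" means total assets divided by equity and ignores borrowing costs; the function names and the sample figures are hypothetical, chosen only to illustrate the point.

```python
def equity_after_move(leverage: float, asset_return: float,
                      equity: float = 1.0) -> float:
    """Equity left after the underlying assets move by asset_return.

    Assumption: leverage = total assets / equity, and the debt owed
    stays fixed (borrowing costs are ignored).
    """
    assets = equity * leverage
    debt = assets - equity
    return assets * (1.0 + asset_return) - debt


def wipeout_decline(leverage: float) -> float:
    """Fractional fall in asset value that reduces equity to zero."""
    return 1.0 / leverage


# How small a decline erases the equity at different leverage levels.
for lev in (1, 10, 30, 50):
    print(f"{lev:>2}x leverage: equity is gone after a "
          f"{wipeout_decline(lev):.1%} fall in asset value")
```

Under these assumptions, a position levered 50 times loses all of its equity when the underlying assets fall by just 2%, and anything beyond that is a loss borne by the lenders. A model does not need to be very wrong for that to happen.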

You can’t fully hedge model risk – that is true. But you can mitigate model risk through the application of robust decision-making processes. A model did not take down Wall Street. Models do not “screw up” – they do exactly what they are supposed to do once they have their inputs. The screw-ups occur solely in our application of models to inappropriate situations or to situations which we do not fully understand. The predictions may not reflect real-world outcomes as precisely as we wish, but that possibility of error needs to be accounted for by the ultimate decision makers. The output of a model should be an input in any decision process, not the entire decision process. For that reason, the emerging generation of predictive technology solutions being employed by all varieties of business seeks to marry model holism (by including ALL of the relevant variables, rather than only the most computationally feasible ones), computational firepower, and above all, ranges of probability-based recommendations, rather than a single output. It may sound heretical at the moment given the present economic calamity, but as the world gets ever more complex, models will become more valuable to decision makers, not less. Informed, prudent decision-making in regard to those models will not be a luxury, but an absolute necessity."
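As a rough illustration of the difference between a single output and a range of probability-based outcomes, the sketch below runs one toy model two ways: once with a single assumed house price change, and once across many simulated changes. Everything in it is hypothetical, including the `portfolio_return` payoff, the 5% expected price rise, and the 8% standard deviation; it is a sketch of the idea, not a description of any real product or portfolio.

```python
import random
from statistics import quantiles


def portfolio_return(house_price_change: float) -> float:
    """Toy model (hypothetical): gains are capped, losses are amplified."""
    if house_price_change >= 0:
        return min(0.08, 0.02 + 0.5 * house_price_change)
    return 0.02 + 3.0 * house_price_change


def outcome_range(mean: float, stdev: float,
                  trials: int = 20_000, seed: int = 1):
    """Return (5th percentile, median, 95th percentile) of simulated returns."""
    rng = random.Random(seed)
    results = [portfolio_return(rng.gauss(mean, stdev)) for _ in range(trials)]
    cuts = quantiles(results, n=20)  # 19 cut points at 5%, 10%, ..., 95%
    return cuts[0], cuts[9], cuts[18]


# Single output: feed the model one assumption and read off one number.
point_estimate = portfolio_return(0.05)

# Range of outcomes: treat the assumption itself as uncertain.
low, mid, high = outcome_range(mean=0.05, stdev=0.08)

print(f"single output:        {point_estimate:.1%}")
print(f"5th to 95th percentile: {low:.1%} to {high:.1%} (median {mid:.1%})")
```

The single output and the median of the range are close to each other in this toy setup, which is exactly why the single number is seductive. Only the range shows the decision maker how bad the unlucky cases are.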
